Most founders and indie hackers have a personal site that looks decent — but quietly underperforms. The real issue isn't design. It's that the site doesn't answer three basic questions in the first 5 seconds:

- Who do you help?

- What outcome do you create?

- Why should anyone trust you?

Here's the structure that fixes it 👇

1. Sharp headline: specific audience + specific outcome. No "passionate professional" fluff.

2. Proof up front: don't make people scroll to find your credibility.

3. Clear services: scope, timeline, what's included. Vague = low-quality leads.

4. Real case snippets: before/after beats generic praise every time.

5. One CTA: one. Not three. One.

The compounding trick most people miss 💡

Write one useful insight per week → turn it into a LinkedIn post → drop it in a relevant community thread. Same message, three touchpoints, zero extra effort. When your content and homepage say the same thing, trust builds fast.

Treat your site as a product, not a resume. Ship small updates weekly. Review monthly. It gets better every iteration — just like your actual product. 🛠️

👉 Full 30-day execution plan here: https://unicornplatform.com/blog/boost-your-brand-with-a-personal-professional-website-guide/

What's the one thing holding your personal site back right now? Let's debug it in the comments 👇

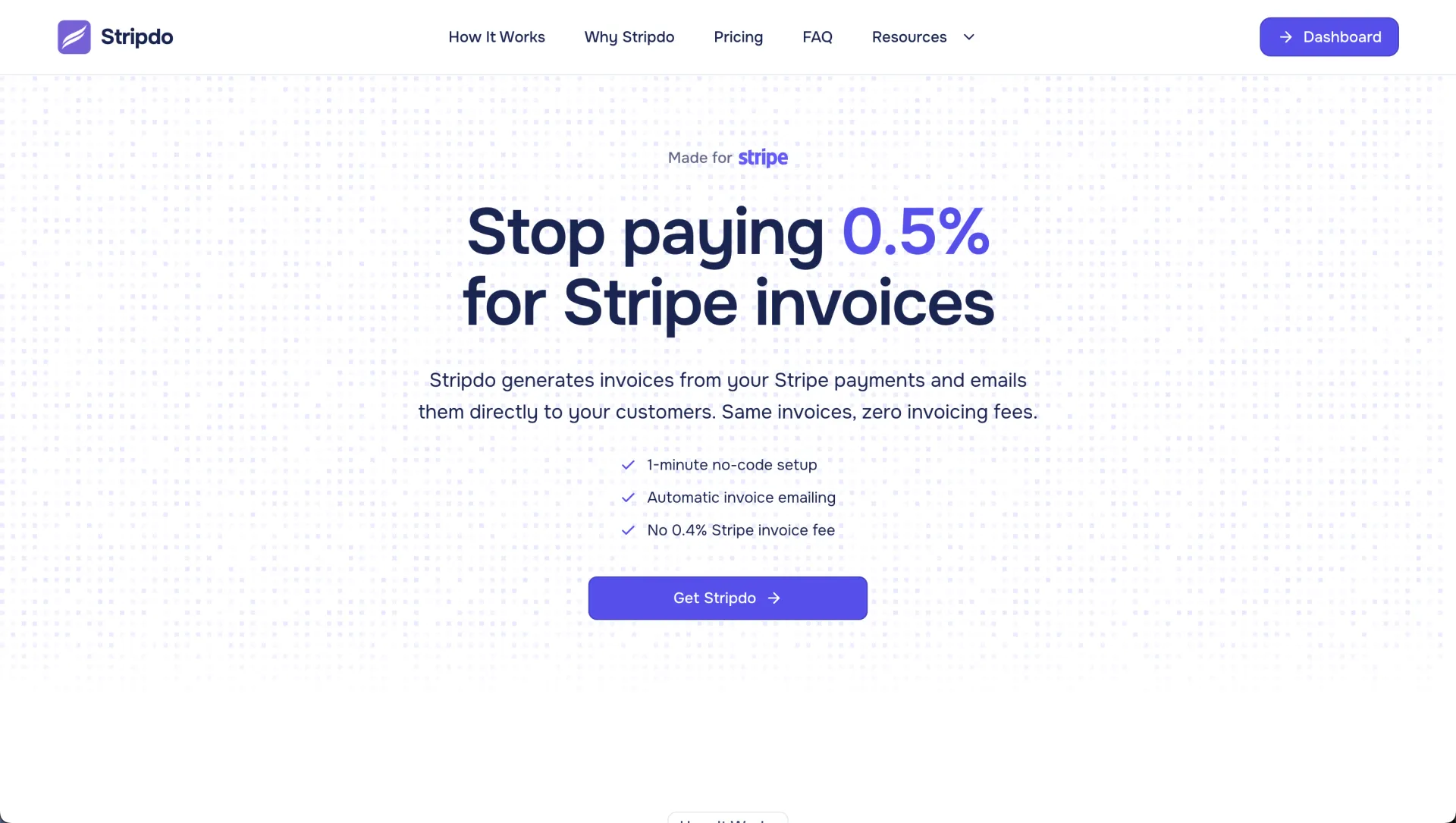

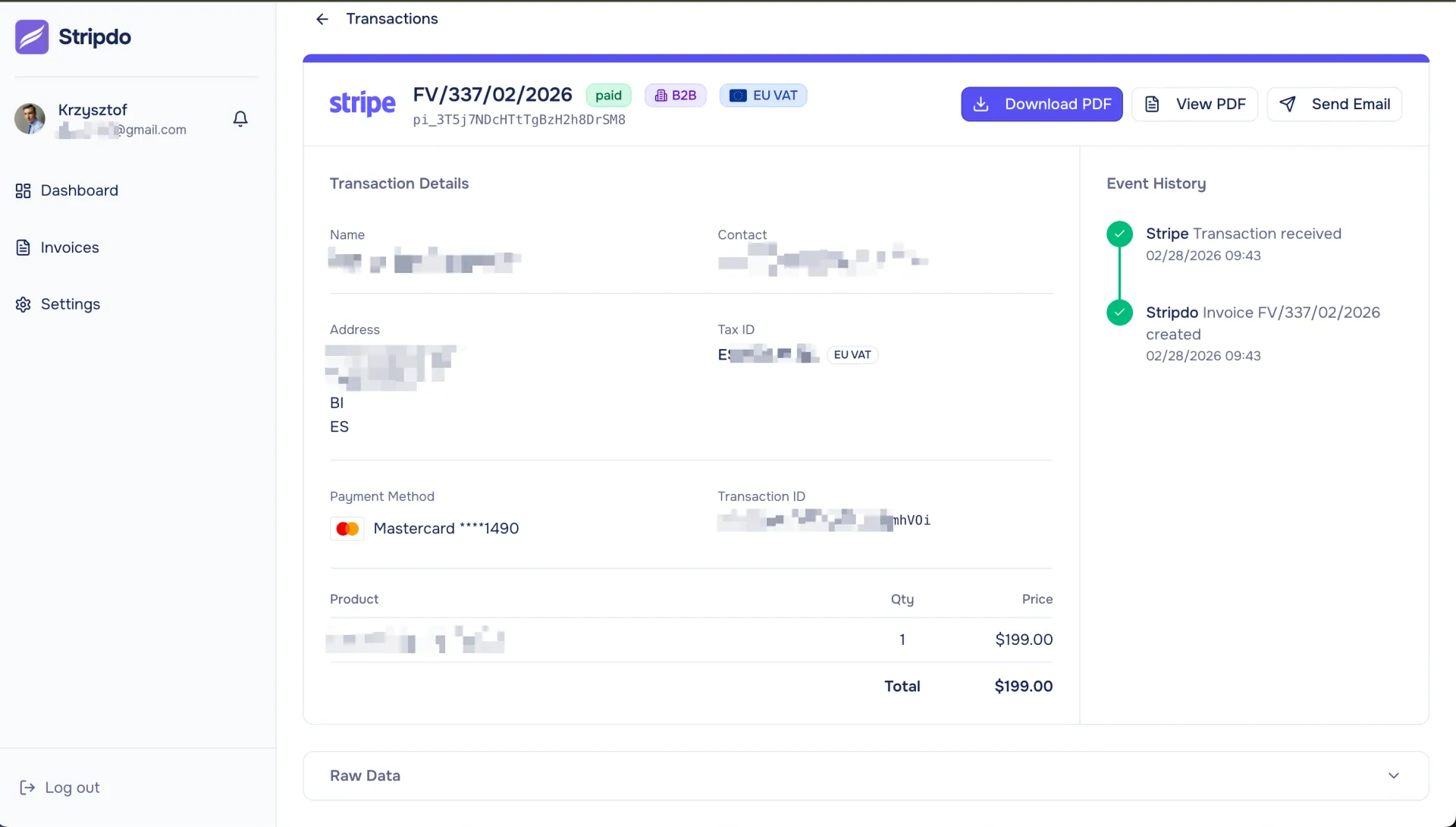

While working on a few projects using Stripe, I noticed something frustrating.

When you receive a payment, Stripe already takes ~2.9% + $0.30 in processing fees.

But if you want to send the customer an invoice using Stripe Invoicing, they charge an additional 0.4% per invoice sent (max $2) - just to generate the PDF and send the email!!

Example:

$100 payment

- ~$3.20 processing fee

- ~$0.40 invoice fee

It doesn’t sound like much, but it adds up quickly.

If you have 100 customers and send 12 invoices per year, that's 1,200 invoices.

At the $2 cap (which 0.4% reaches on invoices of $500 or more), that can be up to $2,400/year just for sending PDFs, separate from transaction fees 🤯🤯🤯🤯
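To sanity-check the numbers above, here is a minimal sketch of the fee arithmetic. It uses only the rates quoted in this post (2.9% + $0.30 processing, 0.4% per invoice capped at $2). The function names are made up for illustration, and actual Stripe pricing may differ by country and plan.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

def processing_fee(amount: Decimal) -> Decimal:
    """Card processing fee at the rate quoted in the post: 2.9% + $0.30."""
    fee = amount * Decimal("0.029") + Decimal("0.30")
    return fee.quantize(CENT, rounding=ROUND_HALF_UP)

def invoice_fee(amount: Decimal) -> Decimal:
    """Invoicing fee at the rate quoted in the post: 0.4%, capped at $2."""
    fee = min(amount * Decimal("0.004"), Decimal("2.00"))
    return fee.quantize(CENT, rounding=ROUND_HALF_UP)

def yearly_invoice_cost(amount: Decimal, customers: int, invoices_each: int) -> Decimal:
    """Total invoicing fees per year for identical invoices."""
    return invoice_fee(amount) * customers * invoices_each

if __name__ == "__main__":
    hundred = Decimal("100")
    print(processing_fee(hundred))                         # fee on a $100 payment
    print(invoice_fee(hundred))                            # invoice fee on $100
    print(yearly_invoice_cost(hundred, 100, 12))           # 1,200 invoices of $100
    print(yearly_invoice_cost(Decimal("500"), 100, 12))    # 1,200 invoices at the cap
```

With $100 invoices the yearly invoicing cost comes to $480. The $2,400 figure is the ceiling, reached when every invoice is $500 or more.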

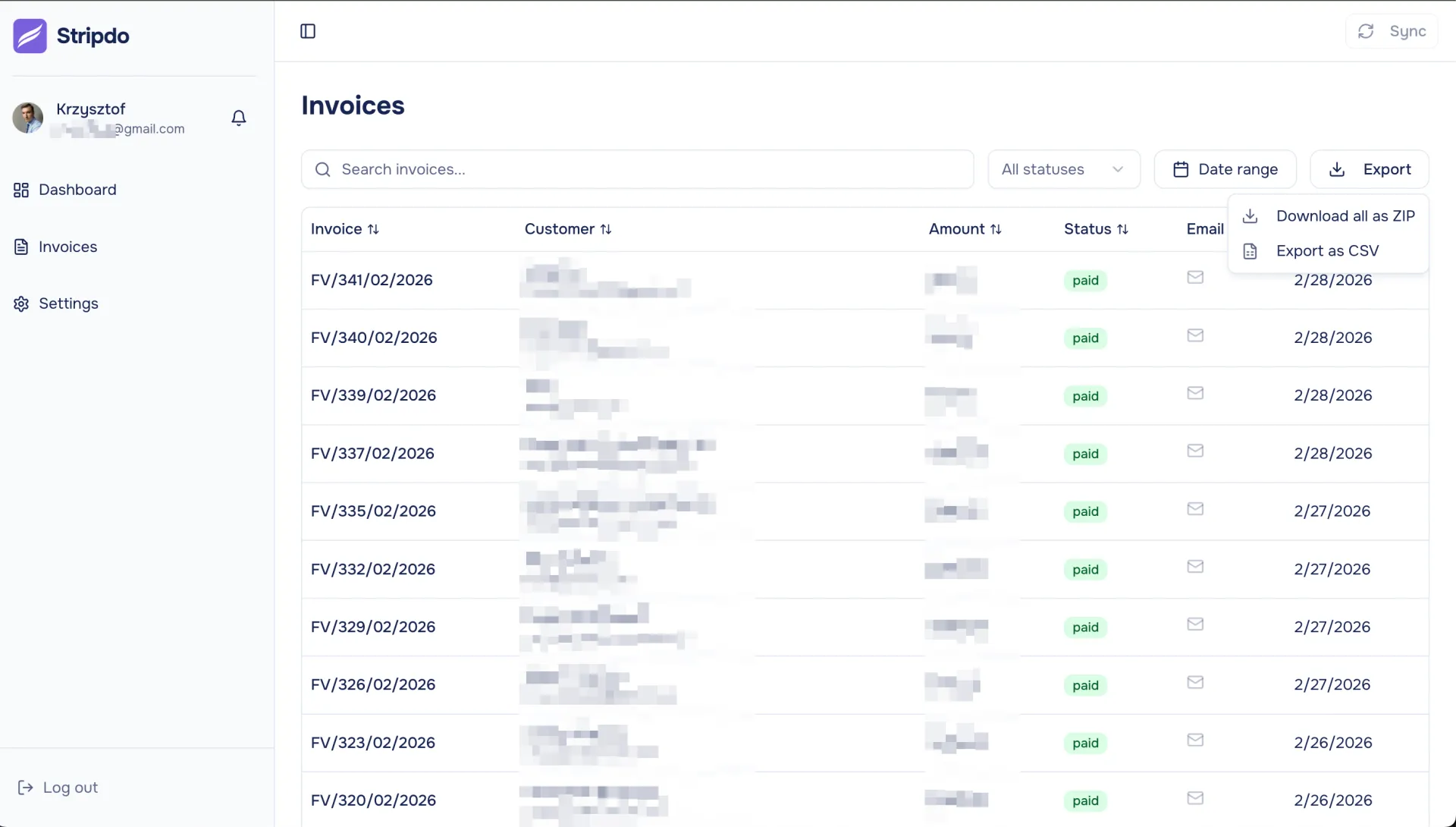

So I built a small tool that generates invoices directly from Stripe payments without using Stripe’s invoicing system.

On top of that it also:

- Automatically detects EU VAT numbers

- Applies reverse charge when applicable

- Supports European and US invoice formats

- Lets you download all invoices in bulk
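For anyone curious what the VAT detection and reverse-charge steps could look like, here is a rough sketch. It is not the tool's actual code. It only checks the general shape of an EU VAT number (country prefix plus 2 to 12 alphanumerics), assumes the seller is EU-based, and uses a simplified rule: reverse charge when the buyer has a VAT number from a different member state. A real implementation would also verify the number against a service such as VIES. `parse_eu_vat` and `reverse_charge_applies` are hypothetical names.

```python
import re
from typing import Optional, Tuple

# Country prefixes used in EU VAT numbers (Greece uses "EL", not "GR").
EU_VAT_PREFIXES = {
    "AT", "BE", "BG", "CY", "CZ", "DE", "DK", "EE", "EL", "ES", "FI", "FR",
    "HR", "HU", "IE", "IT", "LT", "LU", "LV", "MT", "NL", "PL", "PT", "RO",
    "SE", "SI", "SK",
}

def parse_eu_vat(raw: str) -> Optional[Tuple[str, str]]:
    """Return (prefix, body) if raw looks like an EU VAT number, else None.

    Format check only; it does not prove the number is registered.
    """
    cleaned = re.sub(r"[\s.\-]", "", raw).upper()
    match = re.fullmatch(r"([A-Z]{2})([0-9A-Z]{2,12})", cleaned)
    if match is None or match.group(1) not in EU_VAT_PREFIXES:
        return None
    return match.group(1), match.group(2)

def reverse_charge_applies(seller_prefix: str, buyer_vat: Optional[str]) -> bool:
    """Simplified rule (assumption): EU seller, buyer VAT number from another member state."""
    if not buyer_vat:
        return False
    parsed = parse_eu_vat(buyer_vat)
    if parsed is None:
        return False
    return parsed[0] != seller_prefix.upper()

if __name__ == "__main__":
    print(parse_eu_vat("DE 123.456.789"))
    print(reverse_charge_applies("FR", "DE123456789"))
    print(reverse_charge_applies("DE", "DE123456789"))
```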

I originally built it for my own projects but decided to release it:

Google just patented something that could seriously reshape the internet.

Under patent US12536233B1, Google can evaluate your landing page and, if it decides it’s “not good enough,” generate its own AI version of it and show that to users directly inside search results.

Not send them to your site.

Not just summarize it.

Actually replace the experience with an AI-built page.

The wild part? It can personalize that version to each user: price-sensitive shoppers might see one version, brand-loyal customers another. And the patent covers both ads and organic search, starting with Shopping.

So imagine ranking #1 for a keyword, a user clicking your result… and instead of landing on your site, they’re shown Google’s AI-rendered version of it. You may still get the attribution, but you lose control of the message, layout, and conversion path.

Search engines used to index the web. Then they started answering it.

Now they might start recreating it.

If the click becomes optional, the entire traffic economy changes.

source: https://www.seroundtable.com/google-patent-ai-generated-pages-search-41010.html?

You know those TikToks and YouTube Shorts with some random parkour or Minecraft gameplay in the background, and an AI voice reading a dramatic story? A few months ago, I fell down that rabbit hole and thought, "Hey, I could do that."

So I tried making a few.

The first one was fun. The second one was okay. By the tenth time I had to copy-paste a post, clean up the formatting, generate a voiceover, find some background footage, and then painstakingly sync the subtitles... it got old. Really old.

The process was always a grind, and the bottlenecks were always the same:

- Cleaning up the original post to make it work as a script.

- Manually timing the subtitles to match the narration's pacing.

- Finding background video that wasn't distractingly bad.

- Making the whole thing feel "native" to short-form video.

Since I'm more of a coder than a video editor, my brain went straight to one place: "Why don't I just automate this whole damn thing?"

So, that's what I did. I started building a simple tool that:

- Rewrites the story into a script that’s paced for a short video.

- Generates an AI voiceover.

- Adds automatically synced captions.

- Slaps it all on top of some looping, "viral-style" background video.

Basically, it turns a URL into a ready-to-post faceless video. What started as a personal script has now become a simple web tool.
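The caption-sync step is the easiest one to illustrate. Below is a minimal sketch, not the tool's actual code, that groups per-word timings into short SRT cues. It assumes the word timings already exist, for example from the TTS engine or a forced-alignment step; the post does not say where they come from. `Word`, `srt_timestamp`, and `words_to_srt` are names invented for this example.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the narration
    end: float    # seconds from the start of the narration

def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"

def words_to_srt(words: List[Word], max_words: int = 3) -> str:
    """Group words into cues of at most max_words and render them as SRT text."""
    cues = []
    for i in range(0, len(words), max_words):
        chunk = words[i:i + max_words]
        index = len(cues) + 1
        start = srt_timestamp(chunk[0].start)   # cue starts with its first word
        end = srt_timestamp(chunk[-1].end)      # and ends with its last word
        text = " ".join(w.text for w in chunk)
        cues.append(f"{index}\n{start} --> {end}\n{text}\n")
    return "\n".join(cues)

if __name__ == "__main__":
    narration = [
        Word("So", 0.0, 0.2),
        Word("I", 0.2, 0.3),
        Word("tried", 0.3, 0.7),
        Word("it", 0.7, 0.9),
    ]
    print(words_to_srt(narration, max_words=3))
```

Short cues of two or three words tend to match the fast-caption look of short-form video. Emphasizing specific words would need styling beyond what plain SRT offers.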

It's still a work-in-progress, and I'm constantly tinkering. The next big challenges are:

- Better Hooks: How do you actually get someone to stop scrolling in the first 3 seconds?

- Better Voices: Making the AI voices sound less... well, AI.

- Smarter Subtitles: It's not just about syncing; it's about emphasizing the right words at the right time.

- More Variety: What are the options beyond Minecraft parkour? (Seriously, I need ideas!)

This has been a surprisingly fun challenge, especially figuring out the async media processing and making the rendering stable.

If there's anyone else here building tools for creators or in the short-form video space, I'd love to connect and trade notes.

And of course, I'd love to get your feedback. If you were to use a tool like this, what's the #1 feature you feel is missing from the current landscape?

Thanks for reading!

Hi there! The December algorithm update affected my website traffic. The site is for a wholesale produce business in NYC. What can I do to improve the website's organic traffic?